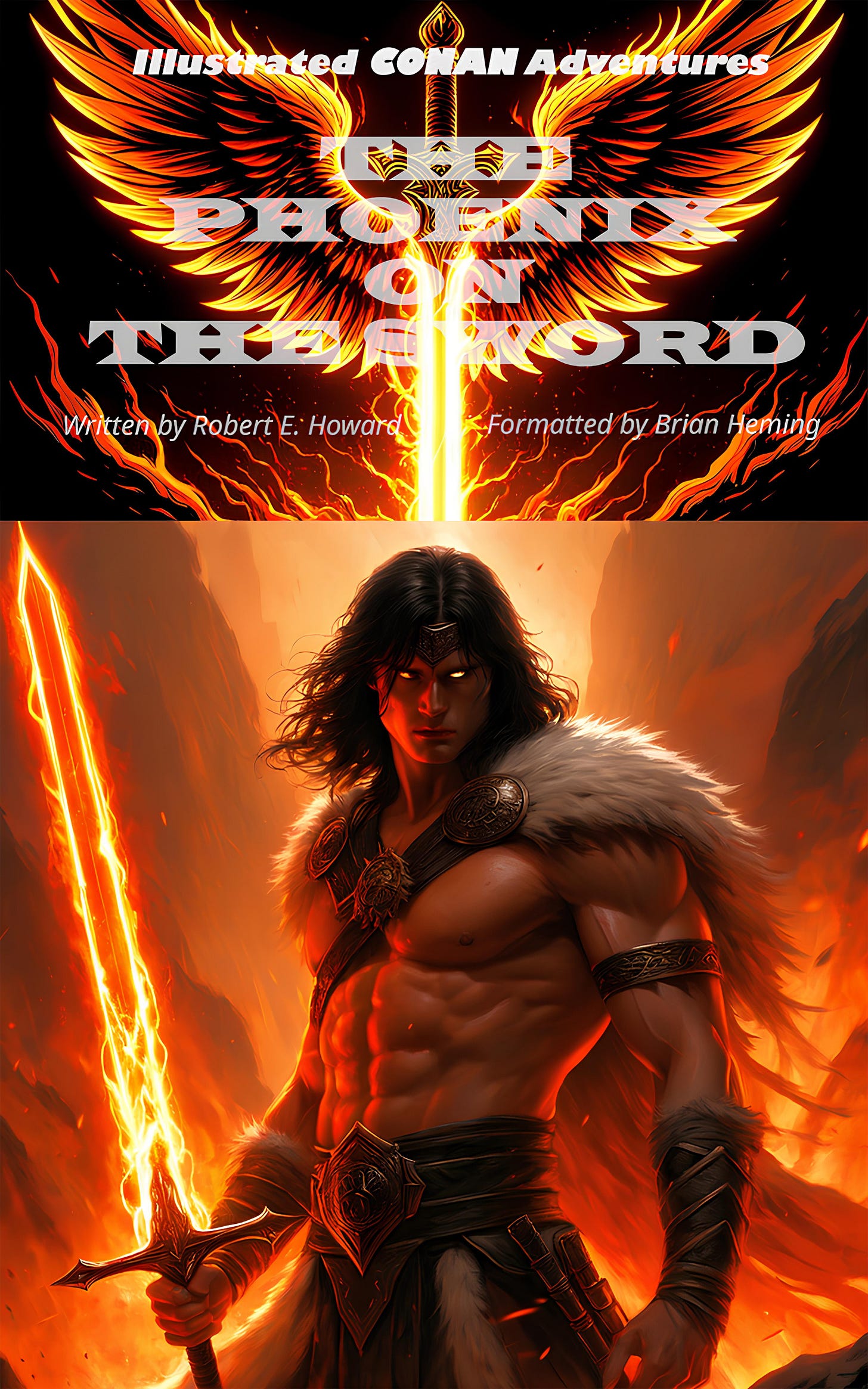

Illustrated CONAN Adventures: The Phoenix on the Sword

Heavy use of neural networks to illustrate a famous story. Now published.

Recently, I’ve been working on semi-automated illustration: using neural network language models to generate prompts for neural network image generators, to make story-relevant illustrations. Because the images from this approach are inconsistent, it’s still on the human to pick the best ones, but since one can generate thousands of relevant and varied images overnight, one can end up with some good stuff. I decided to point this at a work I love and have long thought deserved a heavily illustrated version: Robert E. Howard’s The Phoenix on the Sword, the first Conan story, first published in December 1932. Basic bullet point info up front:

All images I used in the book, free to download: brianheming.com/ica.html

Original story text: Page 51 of PDF of Weird Tales December 1932. Wikisource text copy.

Published on Amazon. Paperback ($9.95) eBook ($1.99) (sponsored links)

The cover:

SPOILER WARNING: Significant discussion of story plot details follows!

This is a composite of two neural net generated images, both from the end of chapter 4: Conan’s sword when Epemitreus magically carves the symbol of the Phoenix upon it, and Conan waking up in his bed in the royal palace, still holding the enchanted sword in his hand. But these are actually after slight human intervention on the prompts. Here are the first versions:

Really cool, huh? The problem is the wings are batty, not phoenix-y. Looking into the prompt we see this:

A bony finger tracing a glowing symbol on the blade of King Conan's sword., mystical energy, ancient magic, bony hand, glowing white fire, mysterious south, Stygian sorcery, Set's worship, arch-demon, demon's mark, sacred symbol, sword with phoenix.

This is because Epemitreus explains he’s inscribing the magic symbol to counter arch-demons, which just kind of gets thrown into the prompt by the LLM. Replacing the references to this with references to the Phoenix, generating a bunch of versions in different styles, and picking the best one, we end up with the sword used on the cover, and towards the end of Chapter 4 in the book.
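For anyone wanting to script this kind of tag swap rather than edit prompts by hand, here is a minimal Python sketch. The function name `swap_tags` and the particular replacements are my own illustrative assumptions, not the exact edits used for the book; it simply treats the prompt as a comma-separated tag list and replaces or drops the unwanted tags.

```python
from typing import Dict, List, Optional


def swap_tags(prompt: str, replacements: Dict[str, Optional[str]]) -> str:
    """Replace or drop tags in a comma-separated image prompt.

    Keys in `replacements` are matched case-insensitively against whole tags.
    A value of None drops the tag entirely. (Hypothetical helper.)
    """
    lowered = {key.lower(): value for key, value in replacements.items()}
    kept: List[str] = []
    for raw in prompt.split(","):
        tag = raw.strip()
        if not tag:
            continue  # skip empty pieces left by stray commas
        if tag.lower() in lowered:
            new_tag = lowered[tag.lower()]
            if new_tag is None:
                continue  # drop the tag
            tag = new_tag
        if tag not in kept:  # avoid duplicate tags after replacement
            kept.append(tag)
    return ", ".join(kept)


# Illustrative only: swap the demon references for Phoenix ones.
original = "bony hand, glowing white fire, arch-demon, demon's mark, sacred symbol"
edited = swap_tags(original, {"arch-demon": "phoenix", "demon's mark": None})
```

The edited prompt can then be fed to the image generator in several styles, leaving the final pick to a human as before.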

The bottom part of the cover, first version:

I count this as a happy little accident. What happened here is me experimenting with giving the LLM a system prompt to generate image tags, but giving it no examples of what the response should look like. I figured it might make more varied pictures, but less good ones, and I was right. But this one comes as a diamond in the rough, from this curiously formatted, commaless prompt that uses a “coma” instead of a comma:

Conan awakens with sword still clutched in hand coma Phoenix symbol glows on the blade like white fire coma Ancient wisdom lingers in the dream coma Stealthy sound in the corridor sets Conan's instincts ablaze coma Barbarian king readies for battle, armor clanking to life coma Gray wolf alertness takes hold.

Adding a few tags, especially on the negative side, to make Conan less wrinkly and without face-paint, then generating a bunch of large square images and picking the best one, we get our cover image.

But interestingly, this poetic interpretation of the prompt comes from an older image generation model. Using a variant of the most recent Stable Diffusion, we end up with a much more literal, less fun interpretation of Conan waking up, which I used in the actual illustration of the book:

So, we see that the purely algorithmic approach gets some pretty cool pictures, but for important images like the cover, or being accurate to the text of the story, it still pays to do some image prompt twiddling and extra human selection.

Also, it occurs to me that we may still be able to get more “poetic interpretations” by plugging a simpler CLIP model into the image generation of a recent model, since the earlier model’s habit of kitchen-sinking everything in the prompt into one poetic image may largely be a property of how its CLIP model interprets the prompt. Something to try out after I post this, I suppose.

More stuff!

So, it turns out this approach worked rather well for the first four chapters of the book, where not a lot of combat happens. In fact in a lot of these cases I just picked an algorithmically generated image with no further twiddling beyond a crop, because it was so good and appropriate. For example:

But for Chapter 5, where Conan fights his way through a huge band of assassins described in a lot of precise detail, the results were initially not so good. Why?

One thing is that Chapter 5 often refers to Conan as “The King”, particularly when the courtiers are talking to him after the battle. Kings in model training data probably wear crowns, are old, and have beards and thrones, none of which apply to Conan. So we end up with stuff like this, which I of course didn’t select:

There’s also the issue that Robert E. Howard only briefly describes Conan’s armor, and by the time we’re late in the narrative, our LLM may stop putting it into the image prompt, even if our system prompt tells it to always describe his armor. Of course, the problem gets worse if we experiment with not using story history and only generating prompts from the last N paragraphs, which may not include the armor. And many depictions of Conan show him shirtless even for stories in which Robert E. Howard gives specific and detailed descriptions of his armor, so we have a training data bias towards drawing Conan unfaithfully in terms of his equipment, something which I strongly disagree with.
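To make the last-N-paragraphs problem concrete, here is a small Python sketch of one possible mitigation: if the window handed to the LLM never mentions the armor, prepend a short reminder. This is an assumption on my part about how one might patch it, not what the book's pipeline did; `build_llm_input`, the keyword list, and the reminder text are all hypothetical.

```python
from typing import List, Sequence


def build_llm_input(
    paragraphs: List[str],
    last_n: int,
    armor_note: str,
    keywords: Sequence[str] = ("cuirass", "armor"),  # assumed keywords
) -> str:
    """Return the last `last_n` paragraphs as LLM input.

    If none of `keywords` appears in that window, prepend `armor_note`
    so the equipment description is not lost. (Hypothetical helper.)
    """
    if last_n <= 0:
        raise ValueError("last_n must be positive")
    window = "\n\n".join(paragraphs[-last_n:])
    text = window.lower()
    if any(keyword in text for keyword in keywords):
        return window  # the window already describes the armor
    return f"[Reminder: {armor_note}]\n\n{window}"


# Illustrative paragraphs, not quotes from the story.
story = ["Conan donned his cuirass.", "The door burst open.", "Steel rang."]
llm_input = build_llm_input(story, 2, "Conan wears a cuirass")
```

A plain keyword check like this is crude, and it would miss armor described in other words, but it shows why a short window can silently drop details the system prompt asks for.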

To resolve this I ended up pre-editing Chapter 5 before sending it into the LLM, replacing “the king” with “Conan” or “Conan in a cuirass” as befitted the situation.
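The pre-edit itself is simple enough to sketch in Python. This is a rough illustration rather than the script I actually ran: `pre_edit` is a hypothetical helper, and choosing between “Conan” and “Conan in a cuirass” for each passage remains a human call.

```python
import re

# Match "the king" as whole words, in any capitalization.
# The trailing \b keeps "the kingdom" untouched.
KING_PATTERN = re.compile(r"\bthe king\b", re.IGNORECASE)


def pre_edit(paragraph: str, replacement: str = "Conan") -> str:
    """Replace references to "the king" before sending text to the LLM.

    `replacement` is chosen per passage by the editor, for example
    "Conan" or "Conan in a cuirass". (Hypothetical helper.)
    """
    return KING_PATTERN.sub(replacement, paragraph)


# Illustrative sentences, not quotes from the story.
sample = "The king took his place. Swords rang on the king's axe across the kingdom."
edited_sample = pre_edit(sample)
armored_sample = pre_edit("the king stood at bay", "Conan in a cuirass")
```

Possessives work with the short form (“the king's” becomes “Conan's”), but the longer replacement reads badly in that position, which is one more reason to pick the replacement passage by passage.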

The armor and weapons characters use vary from person to person but are generally described accurately, so if we’re purists who want the image to capture these correctly, we need the image generators to give people different equipment, something most models are bad at. Of course this can be solved in a variety of ways, but most of them are manual: (1) editing the resulting image in GIMP, (2) manually collaging individual generated characters into a background, (3) using inpainting, or, in theory, (4) generating the characters in separate images then using models to stitch them together. Anyway, for this project I’ve stuck to #1. Of course we could theoretically detect the situation of multiple differently-equipped characters using our language model and run some complex generation pipeline to try to address it, but for this project, I’ve kept it simple and just spammed out images until I got one close enough.

Anyway, as I alluded to, we get all sorts of confusion regarding equipment. This is especially bad for this Conan tale, where Conan uses an axe against everyone except Gromel the giant, but popular depictions usually portray him as using a sword.

I may do a more detect-and-pipeline solution if I do more Conan stories and this keeps coming up, because one on one fight scenes are a big deal, and it isn’t an illustrated Conan book unless you illustrate some battles.

Anyway, the good news is that you still get some good ones, and indeed a fair number of “keep” images in Chapter 5 ended up coming directly from the LLM→Imagegen pipeline, even though I spent a lot of time trying more manual generation, regeneration, and tag twiddling for chapter 5. This even includes one of the combat images, though not a 1 on 1:

I also got some fairly good ones for Conan vs. Volmana the dwarf, though I ultimately went with one from the huge number of regeneration / tag twiddle images I spammed out at the end.

So, bullet point roundup. How’d it go for semi-automated illustration?

Great for all sorts of images.

Occasionally produces amazing stuff you never expected and wouldn’t come up with yourself.

Also produces lots of bad stuff; a human has to pick out the good ones.

In some cases, requires significant post-generation editing.

Pretty bad at certain things, such as one on one combat with textual accuracy as to equipment and attire, but can still serve as a springboard from which to get better images.

This being Substack, there’s probably an obligation to opine regarding the greater societal impact blahbity-blah of the use of neural networks for this. Well, in this particular case (a public domain story with a dearth of illustrated versions), I see substantial positives with very little in the way of negative externalities. We get a detailed illustrated version of a classic story, which never got one, probably because its rights were orphaned and, afterward, because there was no profit in illustrating a public domain story many people would simply download for free. We get free images available for anyone to use for all sorts of future projects. And probably no artist fails to get paid to illustrate it, because in the roughly nine decades since this story was published, no artist did end up getting paid to illustrate it.

As for the broad societal impact of the use of these technologies and the moral and legal issues regarding their training data and outputs, that’s a topic for a different Substack post, probably by someone else.

If there are other classic public domain stories you’d like me to illustrate by such methods, or even another classic Conan story you’d love to see get this treatment, let me know! It was a pretty fun project, and I love the final result. If you want it for free, you can read the public domain story, and download the images for free. If you’d like to pay for it, here are the Amazon links again:

Paperback ($9.95) eBook ($1.99) (sponsored links)

Thanks for reading!