Making Illustrated CONAN Adventures: Black Colossus

Exploring the limits of algorithmic illustration and multimodal models

I made a heavily illustrated version of the fourth published Conan story, Black Colossus by Robert E. Howard, first published June 1933. Basic bullet points up front:

Published on Amazon. Paperback ($11.99) eBook ($1.99)

Original story text: Page 3 of Weird Tales June 1933 Wikisource copy

All images I used in the book, free to download: brianheming.com/ica04.html

Intended Methodology:

(Automatic) Use language models to generate image prompts of two types: “detail” images that focus on a single detail of the scene, likely a closeup, and “general” images that interpret the scene as a whole.

(Automatic) Run this over random divisions of the paragraphs until we have thousands of total images, with many for each paragraph.

(Automatic) Make a web page showing all generated images inline between the paragraphs.

(Theoretically Automatic) Use an image+text → text model to pick candidates for the best ones for each paragraph.

(Manual) Pick the best ones and either just crop them to the desired form, or do further twiddling.

Actual Methodology:

Do the first three steps of above, futzing around with different language models and image generation models, generating 27,000 images in the process.

Experiment with using multimodal image+text → text models throughout to pick the best images, but conclude it was necessary to primarily choose manually still.

Stop generating images and just take the 27,000 images and use a combination of cropping, neural-network-assisted digital editing, and regeneration with twiddling to get the final images used in the books.

Models used:

Language models for prompt generation: Dolphin3.0-Llama3.1-8B, gemma3:12b-it-qat

Image generation models: fluxFusionV24Steps, shuttle-3.1-aesthetic, shuttle3, DreamShaperXL

Multimodal models for scoring: gemma3:12b-it-qat, dolphin-vision-7b

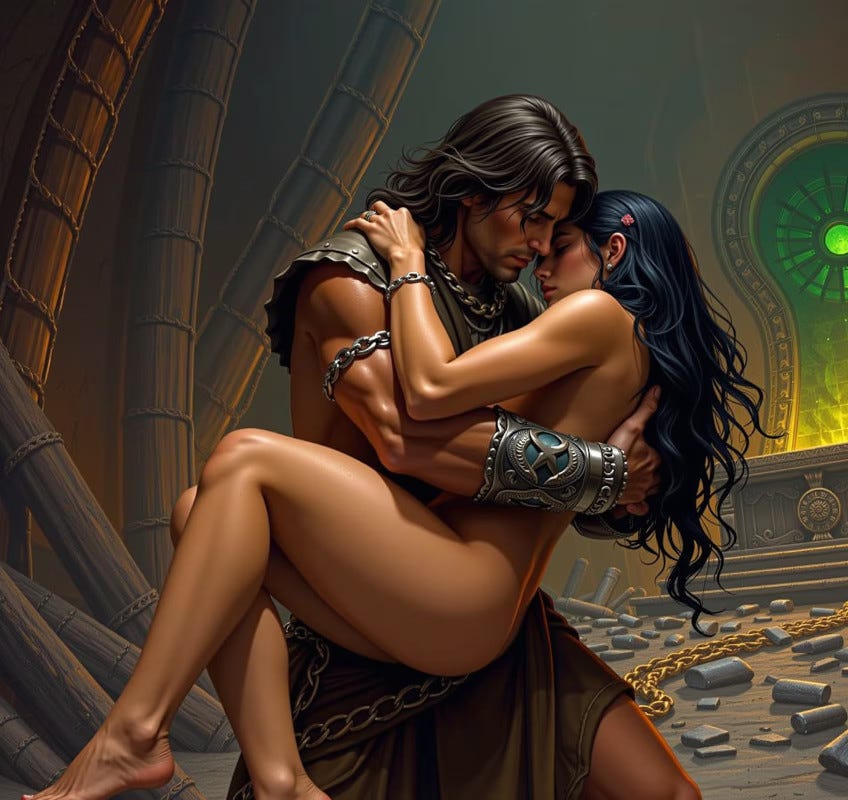

The cover: (SIGNIFICANT DISCUSSION OF PLOT DETAILS a.k.a. *SPOILERS* BELOW THIS POINT. MILD ALGORITHMICALLY GENERATED NUDITY BEYOND THIS POINT.)

This is a composite of two images, both further refinements of images from the book. The top image comes from Chapter 2, when Princess Yasmela asks Conan about why her kingdom is in trouble, meanwhile charming him with her beautiful eyes. However, the one found in the book is significantly lower resolution: 1152x858.

Amazon Kindle covers need to be 1600x2560, though, so we upres the image to bring it to 1600+ width. This can easily be done with a generic upres and refine, and in krita-ai-diffusion there’s a handy tab to do this quick from the UI:

However, I find I get better images by upresing the image then refining the image at lower strength and the same prompt. For a cover image we care enough to bother, and we can just have ComfyUI churn out new versions until we like one. Here’s the pipeline I used:

Relevant parts circled in red. What’s going on here? Well, we load the image, increase its resolution by 1.39—bringing it from 1152 wide to 1601 wide—by simply interpolating the pixels from their neighboring ones, no neural networks required. Then we encode it via the VAE, refine the image at 50% denoise (needs to be less than 1.0 or we’re basically just generating a new image), VAE decode, and save it. The CLIP prompt is the prompt I originally generated the image with. I then just ran the generator on instant-requeue until I got an image I liked. Note that this method will slightly change the image, but hey, perhaps you’d rather the image was subtly changed in ways you like better, right?

Incidentally, the image I used for the chapter wasn’t the actual one generated by the LLM→Imagegen pipeline. Actually, I took the best image I got from that and refined it. The problem is that the best image had Conan unarmored, which was not textually accurate:

Conan’s supposed to be in chainmail. So I twiddled the prompt then did pretty much the same workflow I used in the upscale, sans upscale. I added shirtless, topless to the negative prompt, and explicitly specified his armor in the positive prompt:

We need a bit higher strength on this one since we’re completely rewriting Conan’s looks here, but it works pretty much the same, and our output image still keeps the general shape and composition of the input image.

Now for the bottom half of the cover image:

Black Colossus is the first Conan story to feature the later-iconic plotline of rescuing in a damsel in undress. This image comes from the end of the final chapter, where Conan rescues a now-naked Princess Yasmela from an evil sorcerer just as he’s about to sacrifice her—and of course, he has to rip off all her clothes before sacrificing her. For reasons. Of magic wizard stuff. Yeah.

So yeah, in our mostly-textually-accurate book illustrations, this is the image (I ALREADY WARNED YOU ABOUT NUDITY ABOVE OKAY?)

Yeah, yeah, it’s fine, right? No nips, right? But the thing about Amazon covers is that the erotic fiction authors have gamed out what gets your book blocked on Amazon quite extensively, and found that any implication that one of the characters is not wearing pants, or that a female character is not wearing a top, whether the privates are revealed or not, is sufficient to get your book blocked and your account potentially banned. So we have to get some clothes on these characters! Here, I worked in krita-ai-diffusion, essentially just pasting some big ol’ brown polygons where her bikini top and bottom would be, and a grey blob where Conan’s midriff armor would be, then refined the image with this prompt which was the original prompt minus the naked, and negative-prompting nudity:

A close-up view of Conan the Barbarian in chainmail, holding dark-haired Princess Yasmela's richly-dressed body tightly as she clings to him with convulsive strength. Their embrace is passionate and desperate, reflecting the chaos and destruction that surrounds them. The room still radiates an unholy glow from the black jade altar, but it seems less intense now that the sorcerer's power has been broken. The atmosphere is charged with emotions as both Conan and Yasmela grapple with the aftermath of their harrowing experiences. Despite the carnage that has occurred and the work that lies ahead, they find solace in each other's arms, affirming their bond amidst the ruins of a once-powerful sorcerer's lair. -text, watermark, signature, shirtless, topless, naked, chain

Note that this is a natural language prompt. If refining an image with lots of natural language in krita-ai-diffusion and its default models, your options for model are probably Flux and Flux (schnell); 1.5 models and XL models essentially work on the tag-based style of prompting.

More stuff! Trying out image models.

I started off doing this book with two basic ideas: try out some different image generation models, and try out using a multimodal model to do image scoring, instead of having a human look through tens of thousands of images himself. Pursuant to this, I did two proof-of-concept chapters which I posted to substack. First one, I tried using shuttle-diffusion instead of flux for image generation. Like flux, this is a natural language model that can generate good images in just four steps. After I completed my previous illustrated Conan book, The Scarlet Citadel, someone suggested giving it a whirl. So here’s my prototype chapter 1 illustrated primarily with shuttle 3.1, prompts generated by dolphin3-8b:

I found shuttle produced nice images, but in general was not as good as prompt-following as flux. Shuttle 3.1 also had its own particular art style and was not good at following style tags like “anime style” or “graphic novel style”, which was not such an issue with Shuttle 3. Anyway, one thing I noticed when I started illustrating the actual book was that the images were not textually accurate: Shevatas, the thief who is the main character of the chapter, is described as dark-skinned and naked except for a red loincloth. As the chapter wears on the language model loses this context and stops describing him, even if we prompt it to, and we end up with a generic dark-haired white dude in dark clothing, the model’s idea of a thief. Fine. We could of course try fancier LLM-based solutions, having a model edit the prompts to put in his appearance, or we could just search-and-replace Shevatas with a description of him everywhere in the text so the context is not lost—I did this with chapters 2 and 4 to force Conan in chainmail everywhere Conan is mentioned. But at this point I was sick of generating more images, sitting on more than 20,000, so I just inpainted all the images I used in the prototype, selecting Shevatas in krita-ai-diffusion and inpainting him as a Pakistani thief in a red loincloth. Worked fine. Hooray for inpainting.

Anyway, while I liked the images well enough, shuttle didn’t cut it for the more complex images of the later chapters, as its prompt following wasn’t good enough to do crazy stuff like a chariot pulled by a black camel driven by a demon monkey with a green-robed sorcerer in it kidnapping a princess with Conan on a horse chasing after it. Actually I’m not sure any model can handle all of that, but flux comes a lot closer.

I also tried DreamShaperXL, which generates images much faster than natural-language models and worked quite well for The Scarlet Citadel. For this one it only really produced useful results for chapter 1, as tag-based image generation doesn’t do well for more than one character on-screen, not having good ways to specify attributes for only a single one of the characters. In this story, only chapter 1 is solo-character-focused, and of course I’d already illustrated it with shuttle3.1.

More stuff! Trying out scoring models.

When making my last illustrated Conan book, The Scarlet Citadel, I ended with the idea of replacing using my human eyes to pick good images from over 10,000, with offloading some of that work to a computer. I tried a large number of multimodal image+text→text models small enough to work locally on my GPU, and found most of them terrible. Some would insist that any picture, including a random one of a giraffe, was exactly what was described in the text. How a giraffe could be Conan slashing a monster with a sword is anyone’s guess. Facebook’s Llama-3.2 did all right, but randomly decided a picture of a door was pornography and refused to rate it—there are ways around stupid refusals, but I’d rather not use models that do this, particularly for Conan stories involving gore and nudity. Ultimately, only two models ended up producing useful outputs: gemma3, and dolphin-vision—though after I released the book, I found the recently released Mistral-Small-3.2-24B-Instruct-2506 does very well in testing. This is a prototype I did for chapter 2 using gemma3:

Black Colossus, chapter 2: CONAN with Pure Algorithmic Illustration

Image prompts generated by Dolphin3.0-Llama3.1-8B. Images generated by fluxFusionV24Steps. Images scored by gemma3:12b-it-qat on Artistry, Poeticness, Nonweirdness, and Textual Accuracy. All images with one-pass score > 37.0/40 included (8 images out of 1490 scored), including nudity. All images after Conan appears with score 37.0/40 included (9 images)…

The images are far worse than a human would pick. When analyzing the model’s outputs, it turned out this isn’t just inaccuracy on the part of the model: gemma3 would actively downrank images with a muscly Conan, a beautifully-dressed princess, or textually-accurate portrayal of dark magic, stating that it deemed these features “weird” then giving low rankings, despite being told only to rank nonweirdness based on image generation artifacts like extra hands and flying swords. Gemma3 is Google’s model, and likely reflects Google’s lobotomization for anti-human political bias here. Of course, for the prototype I didn’t instruct the model to pretend it’s a Conan fan who likes beautiful princesses and dark magic, so there’s possibly some counterplay I could do in the prompting.

I added a category weighting and played with the weights here, and found that by reducing the weight of nonweirdness very low and counting textual accuracy double, I could get it to rank the best images somewhat high. Anyway, I figured by adding a number of categories (e.g. Beauty, Artistry, Poeticness, Nonweirdness, Appeal to Men, Sexiness, Action, Specificness, Appeal to Conan Fans), using a less politically-lobotomized model, prompting the model to pretend it’s a Conan fan, and using a small regression model to weight the category scores to match my human preferences, I could likely get some pretty good results, at least for picking a top 10 for a human to further pick from. But I gave up on it until after making this book, figuring that once I’d made the book, I could use the images I picked in making the book to test scoring models and train category weighting models.

Hard Cases

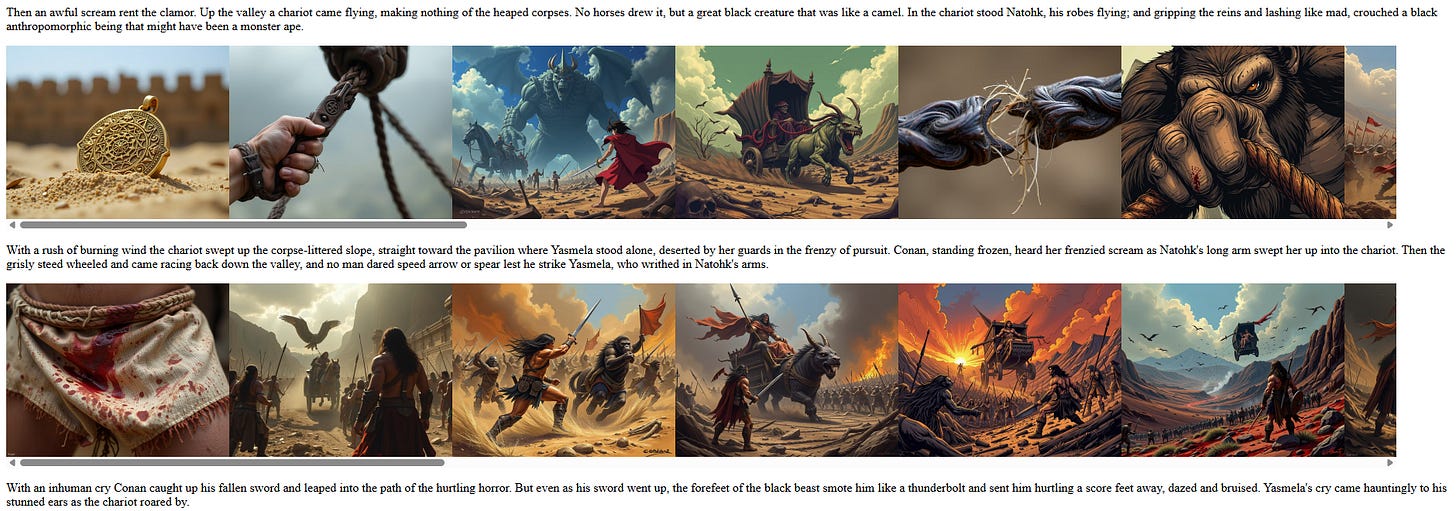

Some images are easy for text-to-image generators; some are hard; some are basically impossible. They’re great at landscapes. Pretty good at any closeup that involves a single main character. Bad at putting two very different looking people in a scene without mixing up their attributes. Terrible at things they have little-to-no training data for, which includes gore for most models, but also includes stuff like riding a horse without a saddle or bridle. Useless when it comes to one-shot generating a single image with a lot of different specifically-described weird dudes in it. So of course we come to the hardest image of the story, from chapter 4:

If you can read the tiny text, you see it describes a chariot drawn by a black camel, driven by a monstrous anthropomorphic black ape, with the robed sorcerer Natohk standing in the back, kidnapping princess Yasmela, then being leaped at by Conan. Ouch. Not a surprise that the resulting images aren’t exactly accurate. The short answer to doing these images is “inpainting”, though “collage” would also work. Get the best thing you can get out of a model, which probably only contains two of the elements, then either use an inpainting model to fill in the rest, or generate the other elements separately and paste them in using a photo editor such as GIMP. Anyway, for this one specifically, I took the best image I had and looked up its prompt, intentionally removed the sorcerer Natohk from the prompt, generated images until I liked one, then inpainted a sorcerer in green robes (Natohk) into the back of the chariot. So in pictures worth a thousand words:

Regenerate twiddling Natohk out the prompt and fixing the driver to be the ape:

With krita-ai-diffusion, inpainting a masked green-robed sorcerer standing in the back seat then cropping:

Wrap up thoughts

One thing I did too much of on this project was try stuff out—new image generation models, different language models for prompt generation, multimodal scoring models, futzing around with methodology. The result was that I had far too many images at the end and published the book months later than intended. Probably, I should’ve picked only one major thing to try, whether different models, image scoring, or something else. Still, I was able to nip it in the bud by just refusing to run more automated generation and running with what I had.

The idea of offloading aesthetic judgement to the machine is probably a bit early, but we can at least get the machine to throw away images that totally suck and save some human mental budget. The big problem I see with model-based scoring is the compute expense: the simpler image generation models can generate an image in 5 seconds, and I had flux generating an image every 20 seconds on my laptop with constantly changing complicated natural language prompts. But currently, models capable of understanding the text of a Conan story AND judging whether an image is good in that context take long enough to run we’re giving up a lot of images generated if we want to score them afterward. Of course, there are probably some optimizations we can do here. And if we did get good aesthetic judgement consistently we could quietly illustrate the heck out of everything public-domain without a human doing more than run a script and go to bed, perhaps just cropping the resulting images to his taste in the morning.

Finally, the same closing I wrote for my last Illustrated CONAN Adventures book:

This being Substack, there’s probably an obligation to opine regarding the greater societal impact blahbity-blah of the use of neural networks for this. Well, in this particular case—public domain story with a dearth of illustrated versions—I see substantial positives with very little in the way negative externalities. We get a detailed illustrated version of a classic story, which never got one probably due to its rights being orphaned then afterward there being no profit in illustrating a public domain story many people would simply download for free. We get free images available for anyone to use for all sorts of future projects. And probably no artist fails to get paid to illustrate it, because in the 92 years since this story was published, no artist did end up getting paid to illustrate it.

As for the broad societal impact of the use of these technologies and the moral and legal issues regarding their training data and outputs, that’s a topic for a different Substack post, probably by someone else.

If there’s other classic public domain stories you’d like me to illustrate by such methods, or even another classic Conan story you’d love to see this treatment for, let me know! It was a pretty fun project, and I love the final result. If you want it for free, you can read the public domain story, and download the images for free. If you’d like to pay for it, here are Amazon links again:

Thanks for reading!

Amazing article, thank you for showing your process in detail! How long did it take to generate 27k images?