Making Illustrated CONAN Adventures: The Scarlet Citadel

Algorithmic illustration, language models, and image generation

I made a heavily illustrated version of the second published Conan story, The Scarlet Citadel by Robert E. Howard, first published January 1933. Basic bullet points up front:

Published on Amazon: Paperback ($9.95), eBook ($1.99)

Original story text: page 51 of Weird Tales, January 1933 (Wikisource copy)

All images I used in the book, free to download: brianheming.com/ica02.html

Methodology:

(Automatic) Use language models to generate image prompts of two types: “detail” images that focus on a single detail of the scene, often as a close-up, and “general” images that interpret the scene as a whole.

(Automatic) Run this over random divisions of the paragraphs until we have thousands of total images, with many for each paragraph.

(Automatic) Make a web page showing all generated images inline between the paragraphs.

(Manual) Pick the best ones.

(Manual) Either just crop them to the desired form, or do further twiddling.
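The automatic steps above could be sketched roughly as follows. This is not the author’s code: the maximum chunk size, the wording of the instruction sent to the language model, and the function names are all assumptions for illustration. The actual language-model and image-model calls are left out, and deciding which paragraph a multi-paragraph chunk’s images belong to is left to whoever fills in `images_by_paragraph`.

```python
import html
import random


def random_division(paragraphs, max_chunk=3, rng=random):
    """Split paragraph indices into contiguous chunks of 1..max_chunk paragraphs."""
    chunks, i = [], 0
    while i < len(paragraphs):
        size = rng.randint(1, max_chunk)
        chunks.append(list(range(i, min(i + size, len(paragraphs)))))
        i += size
    return chunks


def llm_request(paragraphs, chunk, kind):
    """Build the (hypothetical) instruction asking the LLM for one image prompt."""
    scene = "\n\n".join(paragraphs[j] for j in chunk)
    if kind == "detail":
        focus = "Focus on a single detail of the scene, such as a close-up."
    else:
        focus = "Interpret the scene as a whole."
    return f"Write an image-generation prompt for this passage. {focus}\n\n{scene}"


def plan_jobs(paragraphs, passes, seed=0):
    """Each pass uses a fresh random division; each chunk gets one prompt of each type."""
    rng = random.Random(seed)
    jobs = []
    for _ in range(passes):
        for chunk in random_division(paragraphs, rng=rng):
            for kind in ("detail", "general"):
                jobs.append({"chunk": chunk, "kind": kind,
                             "request": llm_request(paragraphs, chunk, kind)})
    return jobs


def gallery_html(paragraphs, images_by_paragraph):
    """Render every paragraph followed inline by all images generated for it."""
    parts = ["<html><body>"]
    for i, text in enumerate(paragraphs):
        parts.append(f"<p>{html.escape(text)}</p>")
        for path in images_by_paragraph.get(i, []):
            parts.append(f'<img src="{html.escape(path)}" width="384" loading="lazy">')
    parts.append("</body></html>")
    return "\n".join(parts)
```

Running many passes is what produces “many images for each paragraph”: every pass cuts the story differently, so each paragraph shows up in several chunks of different sizes.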

Models used:

Language Model: mostly Dolphin 3.0 8B

Image models: mostly variants of DreamShaperXL (using tag-based prompts) and Flux-Fusion (using natural-language prompts)

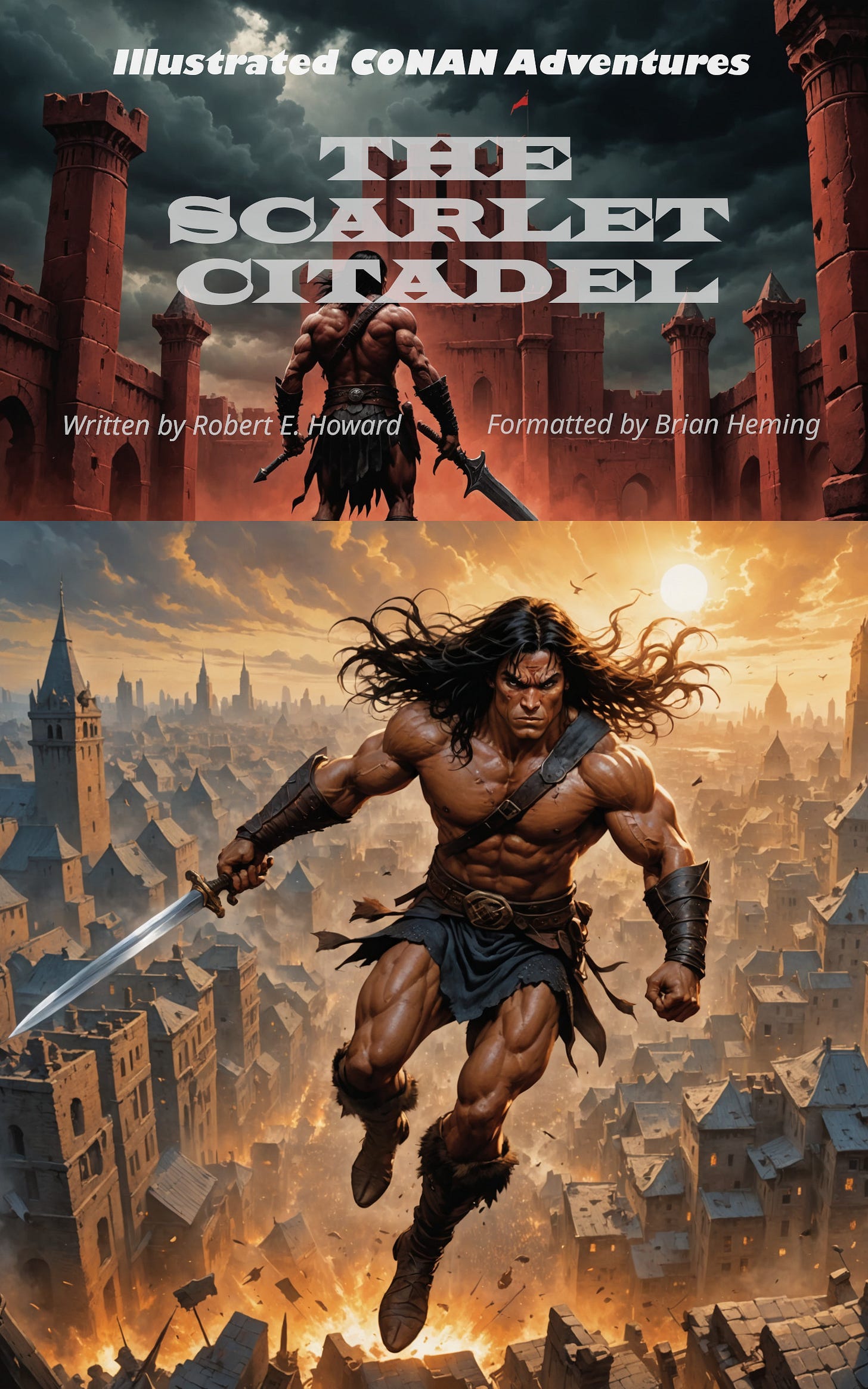

The cover: (SIGNIFICANT DISCUSSION OF PLOT DETAILS a.k.a. *SPOILERS* BELOW THIS POINT)

This consists of two images, both from DreamShaper using tag-based prompts. The bottom image comes from the end of Chapter 4, where Conan jumps off his supernatural winged beast on his return to the capital, then fights his usurper on top of the city walls:

We see that the tag-based approach isn’t so great for textual accuracy, fusing the winged creature and Conan into one figure a high percentage of the time, but it produces poetic interpretations that make for good covers. Most of the other images in the book came from Flux with natural-language prompts, because textual accuracy was the more important consideration there. (Aside: inter-image consistency seems to be the priority of many other illustrators. I totally disagree with this and would rather see Conan in three different styles with the correct armor than in one consistent style wearing a loincloth when he’s supposed to be fully armored. In this scene he’s supposed to be half-naked, since he has just escaped from a dungeon.)

I should also mention that KDP eBook covers are 2560x1600, and full-resolution paperback cover images are even larger, but pre-Flux models struggle to generate anything that veers too far from 1024x1024; I largely used 1152x896 as my standard first-pass image size. In the past I’ve handled this with generic upscaling models that double or quadruple the image size, then scaled and cropped down to 1600 wide, but for this one I used a different method that appears to produce better, or at least different, images:

What’s going on here? We generate our first image at 1024 width, upscale it by 1.57, then run image generation again on the upscaled image for a few steps at 50% denoise. I used this particular one for the top part of the cover, producing a 1608-wide image (1024 × 1.57 ≈ 1608), which I then cropped down to 1600. This refines the image at the higher resolution while still steering it toward the prompt, rather than having an upscaler guess at detail from the pixels alone, and the step count gives us a knob to twiddle. It seemed to make the image sharper and more Conan-y than a generic upscale, and it lets us take an image we already like and make it bigger and better.
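The size arithmetic behind that upscale-and-refine step can be sketched as below. Assumptions: each side is snapped to a multiple of 8 (typical for SDXL-family models such as DreamShaperXL; other models may need a different multiple), the 1024x1024 starting size in the example is hypothetical, and the step count follows the diffusers img2img convention, where strength 0.5 runs about half of the scheduled steps. Other tools, such as ComfyUI, map denoise to step count differently, and the image generation itself is not shown.

```python
def upscale_size(width, height, factor, multiple=8):
    """Scale (width, height) by factor, snapping each side to the nearest multiple."""
    def snap(side):
        return max(multiple, round(side * factor / multiple) * multiple)
    return snap(width), snap(height)


def center_crop_box(width, height, target_width, target_height=None):
    """Return a (left, top, right, bottom) box that center-crops to the target size."""
    if target_height is None:
        target_height = height  # crop the width only, keep the full height
    left = (width - target_width) // 2
    top = (height - target_height) // 2
    return left, top, left + target_width, top + target_height


def img2img_steps(num_inference_steps, strength):
    """Denoising steps actually run under the diffusers img2img convention."""
    return min(int(num_inference_steps * strength), num_inference_steps)


# Hypothetical 1024x1024 first pass, upscaled 1.57x, then cropped to 1600 wide.
w, h = upscale_size(1024, 1024, 1.57)
print((w, h))                       # (1608, 1608)
print(center_crop_box(w, h, 1600))  # (4, 0, 1604, 1608)
print(img2img_steps(20, 0.5))       # 10
```

The crop box is in the (left, upper, right, lower) order that Pillow’s `Image.crop` expects, so it can be passed straight through if Pillow does the cropping.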

More stuff!

In my previous illustrated Conan book, I fought constantly against the tendency of tag-based image generation to combine the attributes of multiple characters into one. This is still a problem with the more recent natural-language image generation, but less so: you’re likely to get two distinct characters, but they’ll still bleed their attributes into each other. It’s worst when the prompt gives no idea what one of the characters looks like. For example, here is the prompt for the eunuch Shukeli, whose corpse is manipulated by the sorcerer Pelias to free Conan from the dungeon:

We see that he’s surprisingly Conan-esque, with his blue eyes, square-cut hair, and tight muscles. Shukeli is actually described elsewhere as a fat eunuch, but since “Conan” is the only name our model has to grab onto, it ends up Conan-ifying him. So for the Shukeli-frees-Conan scene, I edited the prompt to remove Conan’s name entirely and describe Shukeli as a fat eunuch. If we don’t do both, he won’t look right.
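The Shukeli fix amounts to replacing each character’s name with an explicit description, so the model has no single famous name to borrow attributes from. A minimal sketch, assuming a hand-written table of descriptions; the helper name and the replacement phrases are hypothetical and are not the prompt actually used for the book.

```python
import re


def describe_characters(prompt, descriptions):
    """Swap each character name in `prompt` for an explicit visual description.

    Matching is whole-word and case-sensitive, so "Conan" is replaced but
    "Conanesque" is left alone.
    """
    for name, description in descriptions.items():
        pattern = r"\b" + re.escape(name) + r"\b"
        # A function replacement stops re.sub from interpreting backslashes
        # or group references inside the description text.
        prompt = re.sub(pattern, lambda _match: description, prompt)
    return prompt


# Hypothetical table: give Shukeli his described build, drop Conan's name entirely.
descriptions = {
    "Shukeli": "a fat, pale, dead-eyed eunuch",
    "Conan": "a chained prisoner",
}
print(describe_characters("Shukeli opens the cell door for Conan.", descriptions))
# a fat, pale, dead-eyed eunuch opens the cell door for a chained prisoner.
```

Replacements run in dictionary order, so a description that itself contains another character’s name would be rewritten by a later entry; keeping descriptions name-free avoids that.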

One-on-one fights were a problem for the methodology I used in the first book, and this book has essentially three big ones: Conan vs. Prince Arpello (on parapets), Conan vs. King Strabonus (on horseback), and Conan vs. the wizard Tsotha-lanti. I came into this all raring to use inpainting to achieve one-on-ones if necessary, but it turned out to be necessary only in the last fight. For the first two, simply running a bunch of re-seeded regenerations and minor prompt twiddles on the most promising-looking images was sufficient. Conan vs. Tsotha is complicated, with a background explosion, specifically described armor and weaponry for Conan, a severed head carried upward by a soaring eagle, and Tsotha’s headless body, so it’s not surprising that no amount of natural language will make a model get every element right. Still, it didn’t take much modification: I only had to move Tsotha’s severed head into the eagle’s claws, though I also modified Conan’s sword a little.
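The re-seed-and-twiddle loop used for the first two fights can be planned as a simple job list. In this sketch each promising prompt is paired with a handful of fresh seeds, and each minor twiddle is modeled as a phrase appended to the prompt; both that modeling choice and the function name are assumptions, and the image generation itself is not shown.

```python
import itertools
import random


def variation_jobs(prompt, tweaks, n_seeds, seed=0):
    """Return (prompt, seed) pairs: the base prompt and each tweak, times n_seeds seeds."""
    rng = random.Random(seed)  # fixed seed so the job list is reproducible
    seeds = [rng.randrange(2**32) for _ in range(n_seeds)]
    variants = [prompt] + [f"{prompt}, {tweak}" for tweak in tweaks]
    return list(itertools.product(variants, seeds))


jobs = variation_jobs(
    "Conan fights Prince Arpello on the parapets",
    ["Conan is half-naked", "dramatic low angle"],
    n_seeds=4,
)
print(len(jobs))  # 12: 3 prompt variants x 4 seeds
```

Reusing the same seeds across prompt variants makes it easier to see what a twiddle changed, since the starting noise is identical.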

Wrap-up thoughts

Two books in, this methodology already works pretty well. Natural language and a whole lot of tries can get textual accuracy pretty high, though Conan’s armor is still a problem, especially where it’s only described multiple paragraphs after the scene we’re trying to depict.

Labor-wise, it’s still a slog to go through 10,000+ images and pick the best ones, even with a webpage and a scrollbar. Theoretically, this is somewhat automatable by using a multimodal model, feeding it the text and a given image, and having it score the image on criteria like scene accuracy, relevancy, artistic merit, and weird-stuffness. I haven’t tried it, and don’t know if a multimodal model would be anywhere near as good as a human at this. Something for next time, anyway.
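The untried multimodal-scoring idea could feed a shortlist like the one below, so a human reviews only the top few images per paragraph instead of all of them. The model call that would fill in the scores is deliberately left out; the 0 to 10 scale, the criterion names, and the weights (with weird-stuffness counting against an image) are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical weights; a higher weird_stuff score lowers an image's rank.
WEIGHTS = {
    "scene_accuracy": 0.4,
    "relevancy": 0.2,
    "artistic_merit": 0.3,
    "weird_stuff": -0.1,
}


@dataclass
class Candidate:
    path: str
    paragraph: int
    scores: dict  # criterion -> 0..10 score from a (hypothetical) multimodal model


def combined_score(candidate, weights=WEIGHTS):
    """Weighted sum of criterion scores; missing criteria count as 0."""
    return sum(w * candidate.scores.get(name, 0.0) for name, w in weights.items())


def shortlist(candidates, per_paragraph=5, weights=WEIGHTS):
    """Group candidates by paragraph and keep the top `per_paragraph` of each."""
    groups = {}
    for c in candidates:
        groups.setdefault(c.paragraph, []).append(c)
    return {
        paragraph: sorted(group, key=lambda c: combined_score(c, weights),
                          reverse=True)[:per_paragraph]
        for paragraph, group in groups.items()
    }
```

Ranking within each paragraph rather than globally keeps every paragraph represented in the shortlist, which matters when some scenes are much harder for the image model than others.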

I may do a lower-effort version of this at some point and just lazy-illustrate some classic public domain stuff on brianheming.com. By the standards of public domain illustration freely available on the internet, even randomly chosen, mostly-poetically-accurate DreamShaper images would already beat most of the crowd, let alone natural language + Flux plus picking the highest-scoring images.

Finally, the same closing I wrote for my last Illustrated CONAN Adventures book:

This being Substack, there’s probably an obligation to opine regarding the greater societal impact blahbity-blah of the use of neural networks for this. Well, in this particular case (a public domain story with a dearth of illustrated versions), I see substantial positives with very little in the way of negative externalities. We get a detailed illustrated version of a classic story, which probably never got one because its rights were orphaned and, later, there was no profit in illustrating a public domain story many people would simply download for free. We get free images available for anyone to use for all sorts of future projects. And probably no artist fails to get paid to illustrate it, because in the 92 years since this story was published, no artist ever did get paid to illustrate it.

As for the broad societal impact of the use of these technologies and the moral and legal issues regarding their training data and outputs, that’s a topic for a different Substack post, probably by someone else.

If there are other classic public domain stories you’d like me to illustrate this way, or even another classic Conan story you’d love to see get this treatment, let me know! It was a pretty fun project, and I love the final result. If you want it for free, you can read the public domain story and download the images. If you’d like to pay for it, here are the Amazon links again:

Thanks for reading!
