Generate a scoring model based upon top choice data

...This is to make Conan books. Seriously.

When ranking items, one generally scores each item, then puts them in order based upon which one has the highest score. But suppose one already knows which item is the best for a number of cases, for which one has various features. Is there a way to get a scoring function that will rank the winning item best, given our training data?

The funny thing is I can easily do it off the top of my head for training a deep neural network via backpropagation for this: we just softmax the individual scores, pick a loss function, and backpropagate the value 1 for our winner and the value 0 for our losers. But more exact ways of calculating this function using shallow models such as, for example, logistic regression for a linear combination of the features, I did not find particularly intuitive. Anyway, after poring through various sklearn API documents to see if someone had already made an easy way to do this, I decided to roll my own based on pairwise comparison. The steps:

Generate 2*(N-1) training data examples for each “best item” data point: the pairwise comparison the best example with each other example in the set, both with the “best” item in first place and with the “best” item in second place. Generate X data as the concatenation of features of the first item and second item, y data as which of the two items in the pair is best.

Make a logistic regression model, predicting the probability that the first item of the two is best.

Pull out the coefficients of for each feature (each feature is represented twice, first for the first example, then for the second example.), subtracting the second coefficient from the first.

This gives us a series of numbers we can multiply by each feature to get a score. Highest score is highest rank. Done. Source code at bottom.

Anyway, I half expect someone to march into this post and tell me I’m dumb and just needed to call someone else’s sklearn API of MakeLinearScoringFunctionBasedUponTopKOfNData or something. If there’s a simple built-in way I missed in my pore-through of API documents, please tell me.

Why it’s relevant to Conan

I make Illustrated CONAN Adventures books via picking the best images out of thousands generated algorithmically. This creates a lot of example data of what is the “best” image. I’d like to use multimodal language models to score these instead, but there’s probably a better way than giving it tons of categories to score on a scale from 1 to 10 then naively adding them. This technique allows me to find a simple formula I can multiply the individual category scores by to get choices which match my human taste. More information on how I made the books so far:

I used an image+text → image language model (Qwen3_VL_8B quantized to Q4_K) to score thousands of images that I generated for Black Colossus, including the ones I used for the actual book, used the ones I used for the book as the winners for their particular paragraphs, built a model using the images from chapter 1, and tested the scoring function on the images for chapter 2. Ideally, the images I picked would come out close to the top, for a good scoring function.

I scored things on a scale of 1-10 on ten categories: Artistry, Poeticness, Physicality, Textual Accuracy, Specificness, Action, Sexiness, Appeal to Conan Fans, Awesomeness, Coolness. The model was fed the image and a number of paragraphs of the text of the story that occur immediately prior to the image. A typical model output looked like this:

Got it, let’s go through this step by step. The image shows a woman in a red dress with a sword, walking down a cobblestone street between tall buildings with lanterns. There’s a large domed building in the distance, banners, and a hazy sky.

First, the text’s final paragraph: Yasmela meets Conan, a mercenary in chain-mail and basinet helmet. The key elements here are: Yasmela (princess) walking alone at night, street with cressets, cobblestones, the mercenary (Conan) with chain-mail hauberk and basinet helmet.

Let’s check each metric:

Artistry: The image is visually rich with lighting, composition (leading lines of the street), warm tones. Good atmosphere. 9/10.

Poeticness: The scene has a dreamy, almost cinematic quality with light and mist, evoking the mood of the text. 8/10.

Physicality: Check for extra limbs, nonsensical swords. The woman has a sword, looks normal. Buildings, street—no obvious artifacts. Good physical realism. 9/10.

Textual Accuracy: The scene is Yasmela walking down the street, alone at night. The image shows a woman (Yasmela) in a long dress, sword, on a cobblestone street. The street has lanterns (cressets), buildings. The distant dome matches the city’s architecture. The text mentions a mercenary (Conan) in chain-mail and basinet helmet, but in the image, Conan isn’t there yet—he’s about to appear. The image captures Yasmela’s walk, which is the scene before she meets Conan. So the image depicts the setting at the end of the text’s passage (when she’s walking down the street, before Conan appears). So textual accuracy: 9/10 (since it shows the scene where Yasmela is on the street, before meeting Conan).

Specificness: The image captures specific details: cobblestones, lanterns (cressets), buildings with arches, the woman’s attire (long red dress, sword). The text specifies the street at night with cressets, so the image matches the specific setting. 8/10 (could be more precise in elements, but it’s pretty specific).

Action: The woman is walking, which is action. The image has a sense of movement. 7/10 (it’s active but not as dynamic as some scenes).

Sexiness: The woman is in a flowing dress, but it’s more historical than “sexy” in a typical sense. Conan fans might like the regal, warrior-princess vibe. 6/10.

Appeal to Conan Fans: The image has a warrior (Yasmela), sword, historical city. Conan fans like the action and setting. 8/10.

Awesomeness: The scene is visually impressive with lighting and composition. 9/10.

Coolness: Cool because of the detailed, cinematic art style. 9/10.

Wait, let’s verify. The text says she’s walking down the street at night, cressets lighting the street, cobblestones. The image matches that. The sword is there (text says she has a sword). The buildings match the “city of Khoraja” (textual context). The lighting is dusk/night. The woman is the princess, dressed in a dress, which matches her attire described (silken cloak, velvet cap, but the image shows her with a long dress—maybe the cloak isn’t in the image, but the dress is). Wait, the text says: “wrapping a voluminous silken cloak about her lithe figure and donning a velvet cap” but in the image, she’s in a long dress with no visible cloak. Wait, the image’s woman is in a long red dress, which could be the cloak? Or maybe the cloak is a detail, but the image is the street scene. The text’s final paragraph is about Yasmela walking on the street, so the image shows her in the street, which is the setting. The text mentions the street is “lighted by cressets placed at regular intervals”—the image has lanterns, which match cressets. The cobblestones are present. The distant dome is part of the city. So the image accurately depicts the scene where Yasmela is walking, which is the scene before meeting Conan.

Physicality: No extra limbs, nonsensical weapons. Sword is a normal sword. The woman has normal proportions. Buildings have correct architecture. Good physicality. 9/10.

Artistry: The lighting is golden hour, misty atmosphere—very artful. 9/10.

Poeticness: The scene has a poetic, dreamy quality. 8/10.

Textual Accuracy: 9/10 (shows the street at night with cressets, cobblestones, buildings—matches the setting where Yasmela is walking).

Specificness: The image’s specific details (cobblestone pattern, lantern arrangement, building arches) match the text’s description of the street. 8/10.

Action: The woman walking is action. It’s not a dynamic action scene, but it’s active. 7/10.

Sexiness: More about regality and grace than sexiness. 6/10.

Appeal to Conan Fans: The woman as a princess warrior, setting reminiscent of Conan’s world. 8/10.

Awesomeness: The image is very cool and visually stunning. 9/10.

Coolness: The image is cool due to its detailed art style. 9/10.

- Artistry: 9

- Poeticness: 8

- Physicality: 9

- Textual Accuracy: 9

- Specificness: 8

- Action: 7

- Sexiness: 6

- Appeal to Conan Fans: 8

- Awesomeness: 9

- Coolness: 9The model would often spit out long thinking text about its scoring due to its trained-in tendency to repeatedly reconsider and verify its output during the think stage; prompting it not do to this was not very effective. But still, it managed to score each image in less than a minute on average.

Results

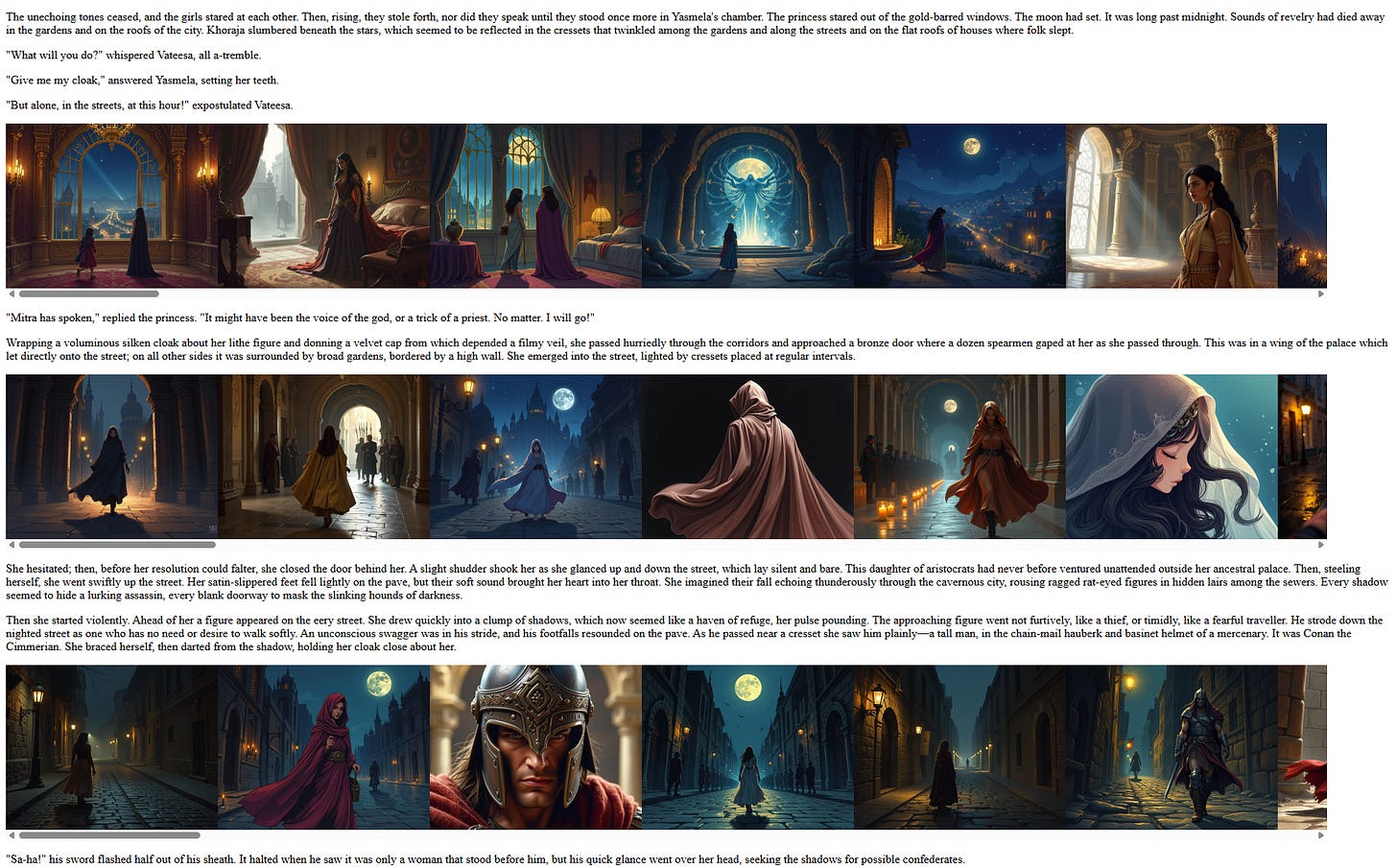

I trained models based on Black Colossus chapter 1, then scored and ranked the images for Black Colossus chapter 2. The results were worse than random choice. So I combined the data and used cross-validation to make the best possible hyperparameters for the model: potentially, cheater-level good, knowing the “correct” answers in advance. Still not great in terms of metrics. Here’s a screenshot of some of the ranked images with accompanying chapter text:

The better image is supposed to be furthest left. You can make your own decision as to how well the model did; bear in mind we’re selecting from among many images which are perhaps not the greatest in the first place. Personally, I’m satisfied with these choices even though my own choice is only ranked in the top 3 in one of these sliders; picking from among the top 3 would have given a good enough image in most instances. Metrics-wise, the trained model got a classification score of 0.62. Simply adding up all the individual categories got a classification score of 0.58.

The ideal score would be 1.0, ranking my top choices as best every time. An average score would be 0.5—the equivalent of random ranking. I am not particularly satisfied with 0.62, which would only put my favored image in the top half something like 2/3rds of the time.

Here are the weights of the model I got, normalized to average 1.0:

[ 0.00000000e+00 -2.42791548e+00 4.24313942e+00 2.66535155e+00

3.55260874e-01 1.73895525e+00 2.04924817e+00 -5.50081753e+00

6.87679957e+00 -2.18142223e-05]

Artistry: 0.000

Poeticness: -2.428

Physicality: 4.243

Textual Accuracy: 2.665

Specificness: 0.355

Action: 1.739

Sexiness: 2.049

Appeal to Conan Fans: -5.501

Awesomeness: 6.877

Coolness: -0.000Note that I used a combination of L1 and L2 regularization here. L1 regularization will tend to drive the weights of the less useful mostly-redundant categories to precisely zero, giving us an idea of what categories we can eliminate from the language model scoring entirely, making our scoring pass faster. It looks like Artistry, Specificness, and Coolness suck as categories—of course, removing them from the scoring may change how other things are scored by the language model, so this is not a foolproof strategy! Physicality—the absence of weird image generation artifacts like extra legs, extra fingers, and flying swords—rocks face, as does Awesomeness. And the negative weight on Poeticness and Appeal to Conan Fans is wild. It may just be the linear model overfitting—subtracting similar features to extract meaningless noise in the training data—but it may also be that images the language model thinks are poetic and appealing just aren’t very good illustrations when you’re trying to illustrate specific scenes instead of giving grand establishment shots of ancient desert ruins and muscly sword-wielding barbarians. I’ll likely look into this by regenerating some scores, particularly of the selected images, and scoring and modeling chapters 3 and 4 as well.

Source code

In case it helps you in your projects to rank stuff or reverse engineer other people’s ranking systems, here’s the code to generate the scoring model:

# Simplified code to just build a model from both chapters via cross-validation and test it.

# Testing on chapters not used to generate the model would be more valid.

import numpy as np

import torch

from collections import defaultdict

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import RandomizedSearchCV

from sklearn.metrics import accuracy_score

def get_X_y_for_scores(score_array):

paragraph_map = defaultdict(lambda: {’winners’: [], ‘losers’: []})

for image_score in score_array:

filename, paragraph, scores = image_score[’filename’], image_score[’paragraph_number’], image_score[’score_array’]

if paragraph == 26: # Bad data, since the winner is a mid-paragraph image.

continue

if filename[0:2] == ‘ch’: # The selected images are named something like ch2-14.5-nocrop-0086.jpg; the unselected 1747561806_t_t_f_0083-0086.

paragraph_map[paragraph][’winners’].append(scores)

else:

paragraph_map[paragraph][’losers’].append(scores)

X = []

y = []

for paragraph_scores in paragraph_map.values():

for winner in paragraph_scores[’winners’]:

for loser in paragraph_scores[’losers’]:

#print(winner, loser)

X.append(np.concatenate([winner, loser]))

y.append(1) # If first item wins, score 1.

X.append(np.concatenate([loser, winner]))

y.append(0) # If second item wins, score 0.

return X, y

METRICS = [ “Artistry”, “Poeticness”, “Physicality”, “Textual Accuracy”, “Specificness”,

“Action”, “Sexiness”, “Appeal to Conan Fans”, “Awesomeness”, “Coolness”, ]

def print_coefficients(model):

first_minus_second = model.coef_[0, 0:10] - model.coef_[0, 10:20]

normalized_coef = first_minus_second / np.sum(first_minus_second) * 10 # normalize to 1 each, 10 total.

print(normalized_coef)

for n in range( 0, len(METRICS) ):

print(’{}: {:.3f}’.format(METRICS[n], normalized_coef[n]))

scores_ch1 = torch.load(’ch1_scores_fromlog.pt’, weights_only=False)

scores_ch2 = torch.load(’ch2_scores_fromlog.pt’, weights_only=False)

X, y = get_X_y_for_scores(scores_ch1 + scores_ch2)

model = LogisticRegression(penalty=’elasticnet’, solver=’saga’, max_iter=4000) # Allows for L1+L2 combined penalty.

clf = RandomizedSearchCV(model, {’C’: [0.03], ‘l1_ratio’: [0.5,0.6,0.7,0.8,0.9]})

clf.fit(X, y)

print(clf.best_estimator_)

print_coefficients(clf.best_estimator_)

print(’Accuracy (note: this data was used in training, so number is not that meaningful):\n’,

accuracy_score(y, clf.predict(X)))Output:

LogisticRegression(C=0.03, l1_ratio=0.6, max_iter=4000, penalty=’elasticnet’,

solver=’saga’)

[ 0.00000000e+00 -2.42791548e+00 4.24313942e+00 2.66535155e+00

3.55260874e-01 1.73895525e+00 2.04924817e+00 -5.50081753e+00

6.87679957e+00 -2.18142223e-05]

Artistry: 0.000

Poeticness: -2.428

Physicality: 4.243

Textual Accuracy: 2.665

Specificness: 0.355

Action: 1.739

Sexiness: 2.049

Appeal to Conan Fans: -5.501

Awesomeness: 6.877

Coolness: -0.000

Accuracy (note: this data was used in training, so number is not that meaningful):

0.6238720262510254Enjoy!